
Entries this day: how-not-to-upgrade-a-server

reasons-i-cant-write-my-book-im-fine

how not to upgrade a server

I was up all night last night rebuilding a server that went down for some reason.

Some reason: root@ip-10-0-33-142:/# sudo find . -type f -mtime +1 -delete

I meant to do it in /var/www/cache instead of /

Good news: now I got to do the server upgrade I've been wanting to do!

I'm most proud of my internal recovery time. Once I realized what I had done, I felt abject terror and shame for just about 15 seconds before realizing it's a good thing this happened and praying with gratitude to Spirit for this crisis+opportunity.

16:02 Friday 23 May 2025

Here is a recapitulation:

I want to recursively delete any files over 1 day old on this directory:

/var/www/fileswarm.net/cache

To recursively delete all files older than 1 day from:

/var/www/fileswarm.net/cache

You can safely use:

sudo find /var/www/fileswarm.net/cache -type f -mtime +1 -delete

🔍 Explanation:

- `sudo` — in case the files are owned by another user

- `find ...` — recursively searches

- `-type f` — only matches files (not directories)

- `-mtime +1` — modified **more than 1 day ago**

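- `+1` means the file's age, counted in whole 24-hour periods with the fraction dropped, is greater than 1, so a file must be at least 48 hours old to match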

- `-delete` — deletes the matched files
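Because `-mtime +1` drops fractional days before comparing, it is easy to misjudge which files match. Here is a small sketch that can be run in a scratch directory, assuming GNU `find` (for `-printf`) and GNU `touch` (for the `-d '... ago'` syntax). The file names are made up for illustration.

```shell
# Build a scratch directory with files of known ages (names are hypothetical).
demo=$(mktemp -d)
touch -d '12 hours ago' "$demo/fresh.tmp"
touch -d '36 hours ago' "$demo/yesterday.tmp"
touch -d '60 hours ago' "$demo/stale.tmp"

# Same test as the real command, but printing names instead of deleting.
matched=$(find "$demo" -type f -mtime +1 -printf '%f\n')
echo "$matched"   # prints: stale.tmp
```

The 36-hour-old file counts as 1 whole day, which is not greater than 1, so only the 60-hour-old file matches.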

🧪 Optional: dry-run first

If you want to see what would be deleted without deleting:

sudo find /var/www/fileswarm.net/cache -type f -mtime +1

Let me know if you also want to:

- delete empty directories

- exclude certain file types

- schedule this as a cron job

oh dear, I ran rm on / when i should have been deep in a subdirectory.

How can i recover?

😬 Oh no, Rob — that's a serious one. Let’s breathe, then assess calmly and act deliberately.

🚨 First: STOP EVERYTHING

The more you use the system, the less chance you have to recover. Any writes could overwrite deleted data.

🧯 What you do next depends on **what exactly you ran.**

🔍 Please answer:

- What **exact `rm` command** did you run? (e.g., `rm -rf *`, `rm -rf /`)

- Did it finish running, or did you interrupt it (Ctrl+C)?

- Are you still logged in?

- Can you still run basic commands like `ls`, `cd`, `sudo`?

- Is this an EC2 instance (sounds like it)?

⛑️ If you're still logged in and can run commands:

✅ First step: Check what still exists

ls /

✅ Second: Check for vital directories

Do these still exist?

ls /bin /sbin /lib /lib64 /usr /etc

💾 IF THIS IS AN AWS EC2 INSTANCE

You’re in luck: the root EBS volume outlives the broken OS, so you can inspect it from another instance:

✅ Recovery strategy:

- **Immediately stop the instance** from the AWS console.

- Detach the root EBS volume.

- Attach it as a secondary volume to another healthy EC2 instance.

- Mount it there and inspect contents.

- Copy out anything salvageable.

- Rebuild or reattach and repair.

😥 If you ran `rm -rf /` and it completed:

Unfortunately, Linux does not have a recycle bin. The OS will likely break quickly or already be non-functional.

You’ll need to:

- Recover from backup or snapshot

- Or **rebuild** the instance and restore any configs or data you can salvage

Please send the exact command you ran, and I’ll help you figure out the best possible recovery path from there.

root@ip-10-0-33-142:/# emacs /usr/local/sbin/

root@ip-10-0-33-142:/# sudo emacs /usr/local/sbin/

[1]+ Stopped sudo emacs /usr/local/sbin/

root@ip-10-0-33-142:/# sudo find . -type f -mtime +1 -delete

^Croot@ip-10-0-33-142:/# ^C

root@ip-10-0-33-142:/# ^C

root@ip-10-0-33-142:/# ls

bash: /bin/ls: No such file or directory

root@ip-10-0-33-142:/# ls

bash: /bin/ls: No such file or directory

root@ip-10-0-33-142:/# ls

bash: /bin/ls: No such file or directory

root@ip-10-0-33-142:/# cd /

root@ip-10-0-33-142:/# fg

sudo emacs /usr/local/sbin/

[1]+ Stopped sudo emacs /usr/local/sbin/

root@ip-10-0-33-142:/# ls

bash: /bin/ls: No such file or directory

root@ip-10-0-33-142:/# ls -al

bash: /bin/ls: No such file or directory

root@ip-10-0-33-142:/# cd /

root@ip-10-0-33-142:/# ls

bash: /bin/ls: No such file or directory

Thanks for the context — it looks like you accidentally ran:

sudo find . -type f -mtime +1 -delete

from / (the root directory), which would recursively delete any file older than one day across your entire filesystem. 😵‍💫

🧠 What’s happening now:

- You're still **logged in**, which is good.

- But essential binaries like `/bin/ls` are gone (`No such file or directory`).

- Your system is likely half-broken and deteriorating rapidly as background processes fail.

🧯 DO THIS RIGHT NOW (MINIMAL MOVEMENT)

✅ 1. **Check if `echo` still works**

echo hello

If yes, we can still work with built-ins. Don’t log out.
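With `/bin/ls` gone, the shell's own builtins can still list a directory. This is a minimal sketch, assuming bash; `ls_builtin` is a hypothetical helper name, not a standard command.

```shell
# List a directory using only bash builtins (no /bin/ls needed).
# Dotfiles are skipped because the * glob does not match them by default.
ls_builtin() {
  local entry
  for entry in "${1:-.}"/*; do
    # An unmatched glob stays literal; skip it unless it is a real entry
    # (or a dangling symlink).
    [ -e "$entry" ] || [ -L "$entry" ] || continue
    printf '%s\n' "${entry##*/}"
  done
}
```

Something like `ls_builtin /` or `ls_builtin /var/www` would then show what survived, without touching any external binary.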

✅ 2. **Try `busybox`**

Some systems have it pre-installed as a fallback:

type busybox # shell builtin; the external `which` binary may itself be gone

If it exists, you can run basic commands like:

busybox ls /

busybox cp ...

✅ 3. **STOP the instance now if it's EC2**

Go to the AWS Console:

- **Stop** the instance (don't terminate)

- **Detach** the root volume

- **Attach** the volume to a helper instance (e.g. `/dev/xvdf`)

- Mount and explore it there

Then you can:

- Recover files

- Compare what's gone

- Copy back configs or data

🛠️ What to do next:

✅ Option A: **Restore from a snapshot or AMI**

If this is EC2 and you’ve taken EBS snapshots or created an AMI of the server, restore from that backup.

✅ Option B: **Rebuild and restore from your backup system**

If no snapshot, rebuild a new instance, then:

- Mount the broken volume

- Copy what you need (e.g., `/var/www`, database files, configs)

- Discard the broken volume

❤️ My advice, Rob:

This kind of mistake is a rite of passage for seasoned admins. You're not alone.

Would you like me to help:

- Write a safe recovery checklist for AWS?

- Build a snapshot automation script?

- Review your `find` commands before you run them next time?

Let me know how you'd like to move forward. I’ve got you.

Yes I can echo hello

Great — that’s a small but important win. Because echo works, you're still inside a mostly functioning shell. That gives us a temporary lifeline to prepare for recovery.

Let’s walk through the most practical path to salvage your data and restore your system.

🚨 Immediate Next Steps (EC2 Recovery Plan)

I’ll assume this is an EC2 instance. Here's a safe and reversible plan to get you back on your feet.

✅ Step 1: Stop the instance in AWS Console

- Go to your EC2 dashboard

- Select the affected instance

- Click **"Stop instance"**

⚠️ Do **not** terminate it — we need the volume intact.

✅ Step 2: Detach the root EBS volume

- In the instance's **Storage** tab, find the root volume (usually `/dev/xvda`)

- **Detach** the volume

✅ Step 3: Attach the volume to a healthy helper EC2 instance

- Attach the volume to another working EC2 instance

- Use a device name like `/dev/xvdf`

✅ Step 4: Mount it and inspect contents

SSH into the helper instance:

sudo mkdir /mnt/recovery

sudo mount /dev/xvdf1 /mnt/recovery

Now you can:

- Inspect what’s still there

- Copy any needed files (e.g., `/var/www`, `/etc`, database files)

- Back up anything critical to S3 or your local machine

✅ Step 5: Rebuild and restore

From here, either:

- Launch a new EC2 instance and **copy back your recovered data**

- Or create a new AMI based on the fixed disk (if it's fixable)

🛠️ Bonus: How to Avoid This Forever

Here’s a safe pattern you can adopt for deletion scripts:

# Prevent disaster by checking the resolved path

TARGET_DIR="/var/www/fileswarm.net/cache"

# realpath turns "." or "../.." into an absolute path before the check

if [[ "$(realpath -- "$TARGET_DIR")" == "/" ]]; then

    echo "Abort! You're trying to delete from root!" >&2

    exit 1

fi

sudo find "$TARGET_DIR" -type f -mtime +1 -delete
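A stricter variant of this guard, as a sketch: the original accident used `.` while sitting in `/`, so the check resolves the path first, then also rejects well-known top-level directories and anything too shallow. It assumes bash and GNU `realpath`. The function name, the deny list, and the three-component depth rule are illustrative choices, not an established convention.

```shell
# Succeeds only if the argument resolves to an existing, reasonably deep directory.
is_safe_purge_target() {
  local resolved slashes
  resolved=$(realpath -e -- "$1" 2>/dev/null) || return 1   # must exist
  [ -d "$resolved" ] || return 1                            # must be a directory
  case "$resolved" in
    /|/bin|/boot|/etc|/home|/lib|/lib64|/opt|/root|/sbin|/usr|/var) return 1 ;;
  esac
  # Require at least three path components, e.g. /var/www/cache.
  slashes=${resolved//[^\/]/}
  [ "${#slashes}" -ge 3 ]
}

# Example use (commented out so nothing is deleted here):
# is_safe_purge_target "$TARGET_DIR" && find "$TARGET_DIR" -type f -mtime +1 -delete
```

Because the path is resolved before the checks, running it with `.` from `/` fails the guard instead of slipping past a string comparison.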

Let me know when you’re ready to mount the damaged volume or want help scripting the file recovery or snapshot setup. You're not the first to do this — but you will come out wiser and stronger.

I stopped the instance in console

Perfect — excellent move. Now your data is safe from further accidental deletion or overwrite.

Let’s keep going:

✅ Step-by-Step Recovery (Next Actions)

🔧 Step 1: **Detach the root volume**

In the AWS Console:

- Go to **EC2 > Volumes** (or the instance’s “Storage” tab).

- Locate the root volume of the stopped instance.

- It’ll usually be attached as `/dev/xvda`

- Select it and click **"Actions > Detach Volume"**

- Wait until its **state says "available"**

🔧 Step 2: **Attach the volume to a healthy EC2 instance**

You’ll need:

- A working EC2 instance (Ubuntu or Debian preferred)

- Root access via SSH

Then:

- Select the **detached volume**

- Click **“Actions > Attach Volume”**

- Choose the helper instance from the dropdown

- Attach it as `/dev/sdf` (which appears as `/dev/xvdf` inside the instance)

🔧 Step 3: **SSH into the helper instance and mount it**

# Create a mount point

sudo mkdir -p /mnt/recovery

# Mount the attached volume (usually the partition is xvdf1)

sudo lsblk # confirm the partition name — might be /dev/xvdf1

sudo mount /dev/xvdf1 /mnt/recovery

If it fails with `wrong fs type`, the filesystem may be partially corrupted. Don't worry — we can try read-only or `fsck` options if needed.

🔍 Step 4: **Explore and recover your data**

Once mounted:

cd /mnt/recovery

sudo du -sh var/www/ etc/ home/ opt/

Copy anything important to:

- The helper instance’s disk

- An attached EBS volume

- S3 (for long-term backup)

- Or even your local machine via `scp`

Let me know once you’ve mounted the volume, or if you’d like help scanning for specific data (e.g., website files, databases, SSH keys, or logs). We can also rebuild your server from scratch once your data is safe.

I think the best idea is to create a new box, install PHP 5.6 on it, get the server working

OR

Install a new box, try to get the code upgraded, but it's pretty old.

I think I have copies of the volumes I need.

That sounds like a solid plan, Rob — and you're thinking like a pro by considering both the safe path and the upgrade path. Here's how I see your two options:

✅ Option 1: **Spin up new box with PHP 5.6 (fastest recovery)**

Pros:

- Easiest way to get back online fast

- No code changes required

- You already know the stack works

Steps:

- Launch a fresh EC2 instance (Ubuntu 16.04 or Amazon Linux 2 may still support PHP 5.6)

- Mount your known-good volumes (`/var/www`, DB, etc.)

- Install PHP 5.6 and dependencies

- Copy config files, start services, test

Best for: Restoring production quickly

🔁 Option 2: **Use this opportunity to upgrade (PHP 8+)**

Pros:

- Future-proofed

- More secure, performant

- Can use modern dev tools (Composer, PSR, etc.)

Cons:

- You’ll need to update old code (array syntax, removed functions, the removed `mysql_*` extension replaced with MySQLi or PDO, etc.)

- Possibly significant refactor depending on legacy style

Approach:

- Launch new box with modern stack (PHP 8.2 or 8.3)

- Mount your old codebase read-only

- Use a staging site to start incremental upgrades

- Use tools like:

- [`phpstan`](https://phpstan.org/)

- [`rector`](https://github.com/rectorphp/rector)

- Composer for dependency management

Best for: Long-term maintainability and dev happiness

🧭 Recommended Hybrid Strategy

Since you're confident you have your volumes:

- **Launch a fresh EC2 with Ubuntu 20.04 or 22.04**

- **Create two volumes:**

- One for temporary PHP 5.6 bootstrapping

- One for upgraded PHP 8.x version

- Get the PHP 5.6 version working first → verify data, config, and functionality.

- **Clone and upgrade the code in parallel** on the same box (in a `/var/www-new` or `~/upgrade-workspace`)

This gives you confidence + flexibility without delaying recovery.

Would you like me to generate:

- A PHP 5.6 install script for Ubuntu

- An AWS user-data startup script

- A quick checklist to begin testing your legacy code for upgrade readiness?

I’m ready when you are.

Okay great can you find me an Ubuntu 16.04 AMI?

Absolutely, Rob — you're on the right track. To launch an EC2 instance with Ubuntu 16.04 LTS, you can use one of the following Amazon Machine Images (AMIs) available in the AWS Marketplace:([Amazon Web Services, Inc.][1])

🟢 Option 1: **Ubuntu 16.04 LTS by SupportedImages**

- **Description**: Optimized for performance in AWS EC2, allowing seamless integration with various AWS services.

- **Features**: Long-term support (LTS) status, ensuring security updates and maintenance for five years.

- **Link**: [Ubuntu 16.04 LTS by SupportedImages](https://aws.amazon.com/marketplace/pp/prodview-hy6xzebkwubo6)([Amazon Web Services, Inc.][2])

🟢 Option 2: **Ubuntu 16.04 LTS with Support by Arara Solutions**

- **Description**: A minimal installation of Ubuntu server 16.04 LTS, updated at the moment of upload with regular updates.

- **Features**: Lean, fast, and powerful; ideal for compatibility with legacy applications.

- **Link**: [Ubuntu 16.04 LTS with Support by Arara Solutions](https://aws.amazon.com/marketplace/pp/prodview-qwrpgwafawmhk)([Amazon Web Services, Inc.][3], [Amazon Web Services, Inc.][4])

🟢 Option 3: **Ubuntu Pro 16.04 LTS by Amazon Web Services**

- **Description**: The official Ubuntu Linux optimized for AWS, with ten years of maintenance including an additional 23,000 packages beyond the main operating system.

- **Features**: Security coverage for the OS and its 3rd party open source repositories, kernel live patching, and automated hardening scripts.

- **Link**: [Ubuntu Pro 16.04 LTS by Amazon Web Services](https://aws.amazon.com/marketplace/pp/prodview-vmuff4yhmwfna)([Amazon Web Services, Inc.][5])

🟢 Option 4: **LAMP Stack Ubuntu 16.04 LTS**

- **Description**: A powerful and versatile solution designed for developers and system administrators looking to deploy web applications quickly and efficiently in the AWS EC2 environment.

- **Features**: Pre-installed with Apache Web Server, MySQL Database Server, and PHP scripting language.

- **Link**: [LAMP Stack Ubuntu 16.04 LTS](https://aws.amazon.com/marketplace/pp/prodview-pfap6foplru6m)([Amazon Web Services, Inc.][6])

🟢 Option 5: **Ubuntu 16.04 Baseline with OS and Security Patches**

- **Description**: Baseline AMI based on the official distribution of the OS image, ideal for use with automated provisioning processes.

- **Features**: Built with the latest Linux operating system patches applied.

- **Link**: [Ubuntu 16.04 Baseline with OS and Security Patches](https://aws.amazon.com/marketplace/pp/prodview-yjp5cw5dgwm2c)([Amazon Web Services, Inc.][7])

Next Steps:

- **Choose an AMI**: Select the AMI that best fits your needs from the options above.

- **Launch an EC2 Instance**: Use the AWS Management Console to launch a new EC2 instance with the selected AMI.

- **Install PHP 5.6**: Once your instance is running, you can install PHP 5.6 using the following commands:

This will add the necessary repository and install PHP 5.6 on your Ubuntu 16.04 instance.
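The install step might look like the following sketch. It assumes the third-party `ppa:ondrej/php` repository, which is a common source of `php5.6` packages. Whether that PPA still publishes builds for Ubuntu 16.04 should be verified before relying on it. The `run` wrapper and the `DRY_RUN` variable are hypothetical helpers so that the commands are only printed by default.

```shell
# DRY_RUN=1 (the default here) only prints each command; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

install_php56() {
  run sudo apt-get update
  run sudo apt-get install -y software-properties-common   # provides add-apt-repository
  run sudo add-apt-repository -y ppa:ondrej/php             # assumed package source
  run sudo apt-get update
  run sudo apt-get install -y php5.6 php5.6-cli php5.6-mysql
}
```

Calling `install_php56` as-is prints the five commands for review; `DRY_RUN=0 install_php56` would actually run them.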

If you need assistance with any of these steps or have further questions, feel free to ask!

[1]: https://aws.amazon.com/marketplace/pp/prodview-xzzw5shhubqi4?utm_source=chatgpt.com "AWS Marketplace: Wordpress on Ubuntu 16.04 LTS"

[2]: https://aws.amazon.com/marketplace/pp/prodview-hy6xzebkwubo6?utm_source=chatgpt.com "Ubuntu 16 (Ubuntu 16.04 LTS) | Support by SupportedImages - AWS"

[3]: https://aws.amazon.com/marketplace/pp/prodview-6detysock3g2e?utm_source=chatgpt.com "AWS Marketplace: Ubuntu 16 ( 16.04 LTS ) with support by Tiov IT"

[4]: https://aws.amazon.com/marketplace/pp/prodview-qwrpgwafawmhk?utm_source=chatgpt.com "AWS Marketplace: Ubuntu 16.04 with support"

[5]: https://aws.amazon.com/marketplace/pp/prodview-vmuff4yhmwfna?utm_source=chatgpt.com "Ubuntu Pro 16.04 LTS - AWS Marketplace - Amazon.com"

[6]: https://aws.amazon.com/marketplace/pp/prodview-pfap6foplru6m?utm_source=chatgpt.com "AWS Marketplace: LAMP Stack Ubuntu 16.04 LTS - Amazon.com"

[7]: https://aws.amazon.com/marketplace/pp/prodview-yjp5cw5dgwm2c?utm_source=chatgpt.com "Ubuntu 16.04 Baseline with OS and security patches - AWS"

Okay I got a box running and I'm logged in via ssh.

Host fs.ab

Hostname 18.180.94.80

User ubuntu

IdentityFile ~/.ssh/fs.abs.pem

IdentitiesOnly yes

I started the box with elastic IP 18.180.94.80 so I can move the elastic IP later.

Gosh my stomach is tight.

I started the new fs.ab box on my AWS account. Now I need to get the volumes from the other AWS account available on my AWS account.

You’re doing everything right, Rob — tight stomach and all. You’ve got presence of mind, and you’re making clean, smart moves. Let’s get those volumes transferred from the old AWS account to yours so you can mount them on fs.ab.

🔄 Cross-Account EBS Volume Access Plan

Here’s what we’ll do:

✅ Step 1: **Create a snapshot of the volume (from the *source account*)**

On the original AWS account:

- Go to **EC2 > Volumes**

- Select the volume you want to transfer

- Click **“Actions > Create Snapshot”**

- Give it a clear name, e.g. `fileswarm-fs-data-snapshot`

✅ Step 2: **Share the snapshot with your account (the *destination*)**

Still on the original account:

- Go to **EC2 > Snapshots**

- Find your snapshot and click **Actions > Modify Permissions**

- Add your new AWS account ID (the one running `fs.ab`)

- You can find your new AWS account ID here: [My Account > Account Settings](https://console.aws.amazon.com/billing/home?#/account)

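Note: encryption cannot simply be unchecked on an existing snapshot. If the snapshot is encrypted, the destination account also needs access to the customer managed KMS key it was encrypted with, and snapshots encrypted with the default AWS managed key cannot be shared directly.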

✅ Step 3: **Log into your new AWS account and create a volume from that shared snapshot**

On your fs.ab AWS account:

- Go to **EC2 > Snapshots**

- Use the dropdown to select **“Private Snapshots”**

- You should now see the snapshot shared with you

- Select it, and click **“Actions > Create Volume”**

- Choose same AZ as your instance (e.g. `ap-northeast-1a`)

- Select desired size and volume type

- Click **“Create Volume”**

✅ Step 4: **Attach the new volume to your `fs.ab` instance**

- Go to **EC2 > Volumes**

- Select the newly created volume

- Click **“Actions > Attach Volume”**

- Choose instance `fs.ab`

- Set the device name, e.g. `/dev/sdf` (will show up as `/dev/xvdf`)
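Steps 1 to 4 can also be done with the AWS CLI. The sketch below only prints the commands, since the snapshot and volume IDs come from the output of earlier calls. The function name, the profile names, and the `SNAPSHOT_ID` / `NEW_VOLUME_ID` placeholders are all hypothetical and must be replaced with real values.

```shell
# Print (not run) the AWS CLI equivalent of the console steps above.
# Args: source profile, destination profile, volume ID, destination account ID,
#       availability zone, destination instance ID.
print_share_plan() {
  local src=$1 dst=$2 volume_id=$3 dst_account=$4 az=$5 instance_id=$6
  printf '%s\n' \
    "aws ec2 create-snapshot --profile $src --volume-id $volume_id --description fileswarm-fs-data-snapshot" \
    "aws ec2 modify-snapshot-attribute --profile $src --snapshot-id SNAPSHOT_ID --attribute createVolumePermission --operation-type add --user-ids $dst_account" \
    "aws ec2 create-volume --profile $dst --snapshot-id SNAPSHOT_ID --availability-zone $az" \
    "aws ec2 attach-volume --profile $dst --volume-id NEW_VOLUME_ID --instance-id $instance_id --device /dev/sdf"
}
```

For example, `print_share_plan old-account new-account vol-0123 111122223333 ap-northeast-1a i-0abc` prints four commands to review and run one at a time, substituting the IDs each step returns.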

✅ Step 5: **SSH into `fs.ab` and mount the volume**

# Check block devices

lsblk

# Mount (assumes it's ext4, adjust if needed)

sudo mkdir -p /mnt/recovered

sudo mount /dev/xvdf1 /mnt/recovered

If the volume has no partition table:

sudo mount /dev/xvdf /mnt/recovered

Let me know the source region and volume details if you’d like help with snapshot sharing permissions or cross-region copies. You're doing this calmly and smartly — I’ve got your back through the whole recovery.

ubuntu@ip-172-30-1-225:~$ sudo mkdir -p /mnt/fsreco

sudo: unable to resolve host ip-172-30-1-225

That error is harmless for your current task — but here's what's going on and how to clean it up:

⚠️ Why you're seeing:

sudo: unable to resolve host ip-172-30-1-225

This means sudo could not look up your system's hostname (ip-172-30-1-225), usually because /etc/hosts has no entry for it.

✅ Quick Fix

You can fix it permanently by ensuring your hostname is correctly mapped in /etc/hosts.

- Check the hostname:

hostname

- Then open `/etc/hosts` and make sure there's a line like this:

127.0.0.1 ip-172-30-1-225

If not, add or fix that line:

sudo nano /etc/hosts

Make sure it includes:

127.0.0.1 localhost ip-172-30-1-225

Then save and exit.
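To check whether the entry is already there before editing, a small sketch like this can help. `hosts_maps_name` is a hypothetical helper, and it takes the file as an argument so it can be tried on a copy first.

```shell
# Succeeds if any non-comment line of the hosts file lists the given name.
# $1 = hosts file, $2 = hostname to look for
hosts_maps_name() {
  awk -v name="$2" '
    { sub(/#.*/, "") }                                        # strip comments
    { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }   # field 1 is the IP
    END { exit found ? 0 : 1 }
  ' "$1"
}
```

On the server, `hosts_maps_name /etc/hosts "$(hostname)" || echo "missing"` would report whether the fix is still needed.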

✅ What to do next

Once that’s resolved (or ignored, since it doesn’t affect your mount commands), continue with:

sudo mkdir -p /mnt/fsreco

sudo mount /dev/xvdf1 /mnt/fsreco # or /dev/xvdf if there's no partition

ls /mnt/fsreco

Let me know what lsblk shows if you're unsure which device to mount. You’re back in motion!

I want the 150GB disk on /mnt/fsreco

ubuntu@ip-172-30-1-225:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

xvda 202:0 0 16G 0 disk

└─xvda1 202:1 0 16G 0 part /

xvdd 202:48 0 150G 0 disk

xvdf 202:80 0 54G 0 disk

Thanks Rob — you want to mount the 150GB disk (/dev/xvdd) on /mnt/fsreco.

Let’s check if it has a partition or if the filesystem is directly on the disk.

✅ Step 1: Check for partitions

sudo lsblk -f

or

sudo fdisk -l /dev/xvdd

🧭 Based on the output:

🔹 If you see a partition (like `/dev/xvdd1`):

Mount that:

sudo mount /dev/xvdd1 /mnt/fsreco

🔹 If there's **no partition** and it just shows a filesystem on `/dev/xvdd`:

Then mount the disk directly:

sudo mount /dev/xvdd /mnt/fsreco
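The partition-or-whole-disk decision can be scripted. This sketch assumes the two-column raw output of `lsblk -rno NAME,FSTYPE <device>`, where a device without a filesystem has an empty second column. `pick_mount_source` is a hypothetical helper name.

```shell
# Reads "NAME FSTYPE" rows on stdin and prints the first device
# that actually carries a filesystem (nothing if none does).
pick_mount_source() {
  awk 'NF >= 2 { print "/dev/" $1; exit }'
}

# Example use on the server (may need sudo for FSTYPE to be filled in):
#   src=$(lsblk -rno NAME,FSTYPE /dev/xvdd | pick_mount_source)
#   [ -n "$src" ] && sudo mount "$src" /mnt/fsreco
```

An empty result means nothing on that disk is formatted yet, which is a cue to stop rather than mount.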

✅ Step 2: Verify the mount

df -hT /mnt/fsreco

ls /mnt/fsreco

You should see your recovered data now.

Let me know what lsblk -f or fdisk -l /dev/xvdd shows if you're unsure which path to take.

ubuntu@ip-172-30-1-225:~$ sudo lsblk -f

NAME FSTYPE LABEL UUID MOUNTPOINT

xvda

└─xvda1 ext4 cloudimg-rootfs dd18e5c5-ed48-41dc-812b-8597ba18e2a8 /

xvdd ext4 b9f16d5c-86e7-448d-92ec-2e0c3bb42cfc

xvdf

loop0 squashfs /snap/snapd/24505

loop1 squashfs /snap/core22/1963

loop2 squashfs /snap/amazon-ssm-agent/11320

loop3 squashfs /snap/canonical-livepatch/316

Perfect — that gives us the green light! 🎉

Your 150GB disk (/dev/xvdd) has an ext4 filesystem directly on the disk (no partition), so you can safely mount it directly.

✅ Mount the 150GB disk

sudo mount /dev/xvdd /mnt/fsreco

Then verify:

df -hT /mnt/fsreco

ls -l /mnt/fsreco

You should now see your recovered files — probably under /mnt/fsreco/var/www/fileswarm.net/ or similar depending on how the original volume was structured.

Let me know what you see and if you'd like to sync or migrate data from there into your new live setup. You're moving smoothly now.

Do you recall the full path for filestore?

Yes — based on everything we've discussed and your setup before the accidental deletion, the full path to your filestore directory on the mounted volume should be:

/mnt/fsreco/var/www/fileswarm.net/filestore

That’s assuming the volume was originally mounted at /var/www/fileswarm.net/filestore and contains the full directory tree.

🔍 To verify:

ls -l /mnt/fsreco/var/www/fileswarm.net/filestore

If that doesn't exist, check what's inside:

ls /mnt/fsreco

ls /mnt/fsreco/var/www/fileswarm.net/

Let me know what shows up and I can help you map the right path for restoring or copying back.

For this new server it's here:

/var/www/fs.abs.co.jp/filestore

I will use fs.abs.co.jp for the domain name on this server (later, once I restore the data!)


Perfect — that’s clean and well-named. Here's a quick plan to get your data back in place:

✅ Copy the data from recovered volume to your new server

sudo rsync -aHAX --numeric-ids /mnt/fsreco/var/www/fileswarm.net/filestore/ /var/www/fs.abs.co.jp/filestore/

Notes:

- This preserves permissions, symlinks, hardlinks, ownership, ACLs, and extended attributes.

- The trailing slash on the source matters: it makes rsync copy the directory's contents rather than the directory itself.

🧪 After copy, verify:

sudo du -sh /var/www/fs.abs.co.jp/filestore

Then:

ls -al /var/www/fs.abs.co.jp/filestore

Once you're happy, you can unmount the recovery volume and optionally clean it up.

Let me know when you're ready to test PHP or need to recreate the old /etc/fstab setup. You're doing this with precision — great job turning a “tight-stomach” moment into a clear recovery path.

Wait. I need help mounting the 54G disk at /var/www/fs.abs.co.jp/filestore

Got it — you want to mount the 54G disk (which is /dev/xvdf according to your earlier lsblk output) at:

/var/www/fs.abs.co.jp/filestore

Let’s walk through the clean and safe way to do that.

✅ Step-by-Step: Mount 54G Disk at Filestore Path

🔹 1. Create the mount point

sudo mkdir -p /var/www/fs.abs.co.jp/filestore

🔹 2. Confirm the device and filesystem

lsblk -f

You should see something like:

xvdf ext4 <UUID> (no mountpoint)

If it shows ext4, and no partitions (no xvdf1), then you're good to go.

🔹 3. Mount the disk

sudo mount /dev/xvdf /var/www/fs.abs.co.jp/filestore

🔹 4. Verify the mount

df -hT /var/www/fs.abs.co.jp/filestore

And list the contents to make sure it's the expected disk:

ls -l /var/www/fs.abs.co.jp/filestore

💾 Optional: Add to `/etc/fstab` for auto-mounting at boot

- Get the UUID:

You’ll see something like:

- Edit `/etc/fstab`:

Add this line:

- Test it safely:

If there's no error, you're good to go.
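The fstab steps above can be sketched as follows. The entry format is standard, but the `nofail` option and the helper name `fstab_line` are assumptions added here, and the UUID is a placeholder to be read from the real device.

```shell
# Compose an /etc/fstab entry for an ext4 data volume.
# nofail (an assumption here) lets the instance boot even if the volume is missing.
fstab_line() {
  local uuid=$1 mount_point=$2
  printf 'UUID=%s  %s  ext4  defaults,nofail  0  2\n' "$uuid" "$mount_point"
}

# Typical flow on the server (commented out, nothing is changed here):
#   sudo blkid -s UUID -o value /dev/xvdf        # print the volume's UUID
#   fstab_line "<uuid>" /var/www/fs.abs.co.jp/filestore | sudo tee -a /etc/fstab
#   sudo mount -a                                # an error here means the entry is wrong
```

Running `sudo mount -a` right after editing matters because a bad fstab line is much easier to fix now than after a failed reboot.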

Let me know once it’s mounted, and we can continue with the data sync or application testing. You're doing great, Rob.

The disk has not yet been formatted.

Do I need to do this first? sudo mkfs.ext4 /dev/xvdf

Yes — exactly right. If the disk hasn’t been formatted yet and you want to use it with an ext4 filesystem (which is standard for Linux data volumes), then this is the correct step:

✅ Format the 54G disk with ext4:

sudo mkfs.ext4 /dev/xvdf

⚠️ **Double-check** you're formatting the correct device! This will **erase all data** on `/dev/xvdf`.

You can confirm it’s the empty 54G volume with:

lsblk -f

Once you’ve formatted it, continue with:

✅ Mount and use it

sudo mkdir -p /var/www/fs.abs.co.jp/filestore

sudo mount /dev/xvdf /var/www/fs.abs.co.jp/filestore

Then check:

df -hT /var/www/fs.abs.co.jp/filestore

and

ls -l /var/www/fs.abs.co.jp/filestore

You’ll now have a clean, ready-to-use data volume.

Let me know if you want to make the mount persistent with /etc/fstab.

Okay I've just attached the largely broken root(?) drive from the old server.

I've attached it at xvdq (q stands for "quite a lesson, hey?")

Help me mount it please; I'm getting nervous.

I hope to find /var/www available on it, but the old-busted Q-drive must NOT overwrite the new pristine /var/www which includes /var/www/fs.abs.co.jp

You're doing great, Rob — and yes, "Q" can forever stand for “Quite a lesson, Quite a comeback.” 🙌

to be continued.. ?


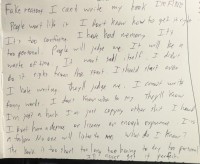

reasons i cant write my book im fine

Transcribed from page 71 of BOOK TWENTY-EIGHT

Reasons I can't write my book I'M FINE:

People won't like it. I don't know how to get it right. It's too confusing. I have bad memory. It's too personal. People will judge me. It will be a waste of time. It won't sell itself. I didn't do it right from the start. I should start over. I hate writing. They'll judge me. I cannot write fancy words. I don't know what to say. They'll know I'm just a hack. I'm just copying other shit I heard. I don't have a degree or license or enough experience. I'm a failure. No one will listen to me. What do I know? The book is too short too long too boring too dry too personal. I'll never get it perfect.

